Journal of Creation 26(3):107–114, December 2012


Information Theory—part 2: weaknesses in current conceptual frameworks

The origin of information is a problem for the theory of evolution. But the wide, and often inconsistent, use of the word information often leads to incompatible statements among Intelligent Design and creation science advocates. This hinders fruitful discussion. Most information theoreticians base their work on Shannon’s Information Theory. One conclusion drawn from it is that the larger genomes of higher organisms require more information, which raises the question of whether this information could arise naturalistically. Lee Spetner claims no examples of information-increasing mutations are known, whereas most ID advocates only claim that not enough bits of information could have arisen during evolutionary timescales. It has also been proposed that nature reflects the intention of the Creator, and therefore all lifeforms might have the same information content. Gitt claims information can’t be measured. The underlying concepts of these theoreticians were discussed in part 1 of this series. In part 3 a solution will be offered for the difficulties documented here.

Origin of life researcher Dr Küppers defines life as matter plus information.1 Having a clear and common understanding of what we mean by information is necessary for a fruitful discussion about its origin. But in part 1 of this series I pointed out that various researchers of evolutionary and creationist persuasion give the word very different definitions.2 Creation Magazine often draws attention to the need for information-adding mutations if evolutionary theory is true, for example:

“Slow and gradual evolutionary modification of these crucial organs of movement would require many information-adding mutations to occur in just the right places at just the right times.”3

What does information mean? Williams introduced many useful thoughts in this journal in a three-part series on biological information.4-6 Consistent with the usage of information above, he points out that

“Creationists commonly challenge evolutionists to explain how vast amounts of new information could be produced that would be required to turn a microbe into a microbiologist.”7

On the same page he adds, “But the extra wings arose from three mutations that switched off existing developmental processes. No new information was added. Nor was any new capability/functionality achieved.”

I understand and agree with the intuition behind this usage of the word information. Nevertheless, even literature sold by Creation Ministries International, such as MIT Ph.D. Lee Spetner’s classic Not by Chance!,8 does not use information in the same sense. Spetner is an expert on Shannon’s Theory of Communication (information) and is one of the most lucid writers on its application.

Sometimes creationists (e.g. Gitt) state that information cannot, in principle, arise naturally, whereas others (e.g. Stephen Meyer, Lee Spetner) say that not enough could arise for macro-evolutionary purposes.2

The view that not enough time was available to add the necessary information found in genomes (based on one definition of information) becomes clouded when Williams argues that “the Darwinian arguments are without force, since it is clear that organisms are designed to vary.”9,10 Behind this reasoning lies a different usage of information.

Williams even implies that information cannot be quantified at all:

“… a new, useful enzyme will not contain more information than the original system because the intention remains the same—to produce enzymes with variable amino acid sequences that may help in adapting to new food sources when there is stress due to an energy deficit.”9

And in another place,

“Since (at this stage in human history) only God can make microbes and only God can make humans, perhaps it actually takes the same amount of information to make a bacterium as it does to make a human. That ‘same amount of information’ is the Creator Himself!”11

I believe that approach should be reconsidered, especially if intention is defined in such generic, broad terms. Suppose the intention is to help one’s daughter get better grades at school. The above suggestion seemingly assigns the same amount of information whether a two-minute verbal explanation is offered, or years of private tutoring over many topics.

I believe most of Williams’ intuitions are right, but hope the model given in the third part of this series will bring the pieces together in a more unified manner. Williams suggests that other codes are present in the cell environment in addition to the one used by DNA. He once made the significant statement:

“God created functional adult organisms of non-coded structural information in adult baramins. We could, in theory, quantify this information using an algorithmic approach, but for practical purposes it is enough to note that it is enormous and non-coded.”11

I agree that information can also be non-coded, but it is not apparent how an algorithmic measure of information could be used, a topic Bartlett has devoted effort to.12

The precise definition of information has dramatic consequences for the conclusions reached. Gitt believes information cannot be quantified. Others believe it can, and in exact detail. Warren Weaver, Claude Shannon’s co-author, had this to say:

“It seems very reasonable to want to say that three relays could handle three times as much information as one. And this indeed is the way it works out if one uses the logarithmic definition of information.”13

When asked by creationists if he knew of any biological process that could increase the information content of a genome, Dawkins could not answer the question.6,14 He subscribes to Shannon’s definition of information and understands the issue at stake, writing later:

“Therefore the creationist challenge with which we began is tantamount to the standard challenge to explain how biological complexity can evolve from simpler antecedents.”15

Several years ago Answers in Genesis sponsored a workshop on the topic of information. Werner Gitt proposed we try to find a single formulation everyone could work with. This challenge remains remarkably difficult, because people routinely use the word in different manners.

In 2009, Gitt offered the following definition for information in this journal,16 which at the advice of Bob Crompton he now calls Universal Information (UI).

“Information is always present when all the following five hierarchical level are observed in a system: statistics, syntax, semantics, pragmatics and apobetics.”

Let us call this Definition 1. Gitt also states that he now uses UI and information interchangeably.17

I have collaborated with Werner Gitt during the last 25 years or so on various topics, and the comments which follow are not to be construed as criticism against him or his work.18 At times it seems there is a discrepancy between what he means and how it is expressed on paper.19 Considerable refinement has occurred in his thinking, and I hope to contribute by a critical but constructive attempt at further improvement.

The variety of usages of the word information continues to trap us. When Gitt wrote:

“Theorem 3. Information comprises the nonmaterial foundation for all technological systems and for all works of art”20

and

“Remark R2: Information is the non-material basis for all technological systems”,21

he appears to have switched to another (valid but different) usage of the word information. For example, it is not apparent why valuable technologies like the first axe, shovel, or saw depended on the coded messages (statistics, syntax) portion of his definition of information, a definition which seems to require all five hierarchical levels to be present.

As another example of inconsistent, or at least questionable, usage of the word, we read that

“… the information in living things resides on the DNA molecule.”22

The parts of the definition of information which satisfy apobetics (purpose, result) do not reside on DNA. External factors enhance and interplay with what is encrypted and indirectly implied on DNA, but apobetics is not physically present there. To illustrate, neuron connections are made and rearranged as part of dynamic learning, interacting with external cues and input, but the effects are neither present nor implied on DNA.

Another important claim needs to be evaluated carefully. Gitt often states the premise that

“The storage and transmission of information requires a material medium.”23

It is true that non-material messages can be coded and impregnated on material media. But information can be relayed over various communication channels. Must all of them be material based? If so, then all, or virtually all, the information-processing components in intelligent minds could only be material. Let us see why.

Suppose one wishes to translate ‘ridiculous’ into German. The intention to translate, and the precise semantic concept itself, are surely encoded and stored somewhere. This intention must be transmitted elsewhere to other reasoning facilities, where a search strategy will also be worked out. All of this occurs before the search request is transmitted into the physical brain, but information is already being stored and transmitted in vast amounts.

Furthermore, is the mind/brain interface, part of the transmission path, 100% material?23 We begin to see that Gitt’s statement seems to imply that wilful decision making and the guidance of decisions must be material phenomena.

Now, as soon as the German word lächerlich is extracted from the biological memory bank,24 it must be transferred from the brain’s apparatus into the wilful reasoning equipment and compared to the ‘information’ which prompted the search. A huge amount of mental processing (i.e. data storage and transmission) will now occur: are the English and German words semantically synonymous for some purpose, or should more words be searched for?

Irrwitzig could be a new candidate, but which translation is better? What are all the associations linked to both German words? Should more alternatives be looked up in a dictionary? Finally, decisions will be performed as to what to do with the preferred translation (stored as the top choice and mentally transmitted to processing components where the intended outcome will be planned).25

More to the point, must angels, God, and the soul rely on a material medium to store and transmit information?

This objection is serious, because of the frequent statements that all forms of technology and art are illustrations of information. An artist can wordlessly decide to create an abstract painting. Where are the statistics, syntax, and semantics portion of the definition of UI? If in the mentally coded messages (which we read above must be material) then either UI is material based or all aspects of created art and tool-making (technology) need not be UI.

In part 3 of this series I’ll offer a simple solution to these issues.

Gitt offers a new definition for UI in his 2011 book Without Excuse:

“Universal Information (UI) is a symbolically encoded, abstractly represented message conveying the expected actions(s) and the intended purposes(s). In this context, ‘message’ is meant to include instructions for carrying out a specific task or eliciting a specific response [emphasis added].”26

Let us call this Definition 2. This resembles one definition of information in Webster’s Dictionary: “The attribute inherent in and communicated by alternative sequences or arrangements of something that produce specific effects.”

I don’t believe Definition 2 is adequate yet. Only verbal communication seems to be addressed. It implies that the symbolically encoded message itself must convey the expected actions and intended purposes, but in part 3 I’ll show that this need not be, and is probably never completely true. Sometimes the coded instructions themselves do convey portions of the expected actions and purpose. This is observed when the message communicates how machines are to be produced which are able to process remaining portions of the message (like DNA encoding the sequence data for the RNA and proteins needed to produce the decoding ribosome machinery). I would agree that the messages often contribute to, but do not necessarily themselves specify the purpose. Communicating all the necessary details would be impractical.

Consider the virus as an example. The expected actions(s) and the intended purpose(s) are not communicated by the content of their genomes, nor are the instructions to decode the implied protein (the necessary ribosomes are provided from elsewhere). Some viruses do provide instructions to permit insertion into the host genome and other intermediary outcomes which can contribute to, but not completely specify, the final intended purposes.

Another difficulty with Definition 2 is that it does not distinguish between push and pull forms of coded interactions. The coded message, ‘What is the density of benzene?’ could be sent to a database. This message, a pull against an existing data source, does not convey the expected actions(s) or the intended purposes.

Of the researchers discussed in part 1 of this series, Gitt’s model offers the broadest framework for a theory of information for the purposes of analyzing the origin of life. He has refined his thoughts continually over the years, but I fear the value will soon plateau out without the change of direction we’ll see in part 3. One reason is that it won’t permit quantitative conclusions.

If an evolutionist is convinced that all life on Earth derived from a single ancestor, then ultimately all the countless examples of DNA-based life are only the results of one single original event. Therefore, Gitt’s elevation of his theorems to laws will seem weak compared to the powerful empirical and mathematically testable laws of physics, for which so many independent examples can be found and validated quantitatively.27 I’m sure Gitt’s Scientific Laws of Information (SLI) will never be disproven because my analysis (introduced in parts 3 and 4 of this series) of what would be required to create code-based systems makes their existence without intelligent guidance absurdly improbable. Others may find my reasoning in part 3 more persuasive than calling observed code-based principles laws, since they seem to be based on such limited datasets.

The contributions of other information theoreticians are quantifiable. Although limited to the lower portions of Gitt’s five hierarchies, I find much merit in them, and their ideas can be included as part of a general-purpose theoretic framework (see part 3). When Gitt wrote:

“To date, evolutionary theoreticians have only been able to offer computer simulations that depend upon principles of design and the operation of pre-determined information. These simulations do not correspond to reality because the theoreticians smuggle their own information into the simulations.”

it is not clear, based on his own definition, what was meant by ‘pre-determined information’. I will show in part 3 that the path towards pragmatics and apobetics can be aided with resources which do not rely on the lower levels (statistics, syntax, and semantics). The notion of information being smuggled into a simulation is widely discussed in the literature, and very competently by Dembski and Marks,28 who show how the contribution by intelligent intervention can be quantified.

Absurdly, Thomas Schneider claims his “simulation begins with zero information”29 and

“The ev model quantitatively addresses the question of how life gains information, a valid issue recently raised by creationists (R. Truman, www.trueorigin.org/dawkinfo.htm) but only qualitatively addressed by biologists.”30,31

Schneider’s simulations only work because they were designed to do so, and are intelligently guided.32 This has been quantitatively addressed by William Dembski.32 Furthermore, the framework in part 3 will show that Gitt’s higher levels can also be quantified.

Gitt’s four most important Scientific Laws of Information, published in this journal,17,18 are:

SLI-1: A material entity cannot generate a non-material entity.

SLI-2: Universal information is a non-material fundamental entity.

SLI-3: Universal information cannot be created by statistical processes.

SLI-4: Universal information can only be produced by an intelligent sender.

Can we be satisfied that these are robustly formulated according to Definitions 1 and 2, above? For SLI-1 the question of complete conversion of matter into energy should be addressed.

What about SLI-2 through SLI-4? I see no chance they would be falsified if we were to replace ‘Universal information’ by ‘coded messages’, which is integrated into UI. With a slight change in focus, introduced in part 3, I believe a stronger case can be made.

SLI-2–SLI-4 using Definition 1

For SLI-2 it is unclear what entity means, since the definition says, “Information is always present when …” and the grammar does not permit the thoughts to be linked. Since apobetics is not provided by the entity making use of DNA, this definition still needs work. Nevertheless, the definition includes the thought ‘in a system’ and this is a major move in the right direction (see part 3).

SLI-3 surely can’t be falsified, since the definition requires the presence of apobetics, which seems incompatible with statistical processes. There seems to be a tautology here, since statistical processes describe outcomes with unknown precise causes, whereas apobetics is a deliberate intention.

SLI-4 makes a lot of sense, but only if one understands UI to refer to a multi-part system and not an undefined entity.

SLI-2–SLI-4 using Definition 2

For SLI-2 it is unclear what entity means, presumably the message. But it is questionable that the message must be responsible for conveying the expected actions(s) and the intended purpose(s). Decision-making capabilities could exist a priori on the part of the receiver, who pulls a coded message from a sender, and then performs the appropriate actions and purposes. The actions and purposes need not be conveyed by the message. Cause and effect here can be reversed.

Example 1. The receiver wishes to know what time it is. A coded message is sent back. The receiver alone decides what to do with the content of the message.

Example 2. A rich man compares prices of various cars, airplanes, and motorboats. The coded information sent back (prices) does not convey the expected actions(s) nor the intended purposes(s). The man provides the additional input, not the message!

SLI-3 and SLI-4 make sense.

Value of Shannon’s work is underrated

Much criticism is voiced in the creation science literature about Shannon’s definition of information, which he preferred to call communication, dealing as it does with only the statistical characteristics of the message symbols. Given Shannon’s goal of determining maximum throughput possible over communication channels under various scenarios, it is true that the meaning and intention of the messages play no role in his work.

My concern is that I suspect his critics may have overlooked some deeper implications which Shannon himself did not draw attention to. There are good reasons why all the researchers mentioned8 in part 1 use expressions like, “according to information theory” or, “the information content is” when discussing their analysis of biological sequences like proteins. Implicit in these researchers’ comments are notions of goals, purpose, and intent. These are notions associated with information in generic, layman terms. Information theory inevitably refers to Shannon’s work, even though the claims made about his work cannot be found directly in his own pioneering publications.

Are there reasons why the goal-directing effects of coded messages, like mRNA, remind us of Shannon’s information theory? Is the wish to attain useful goals intentionally implied in Shannon’s work? The answer is yes. Here are some examples:

- Corruption of the intended message. The series of symbols (messages) transmitted can be corrupted en route. Shannon devotes considerable effort to analysing the effects of noise and how much of the original, intended message can be retrieved. But why should inanimate nature care what symbols were transmitted? Implicit is that there is a reason for transmitting specific messages.

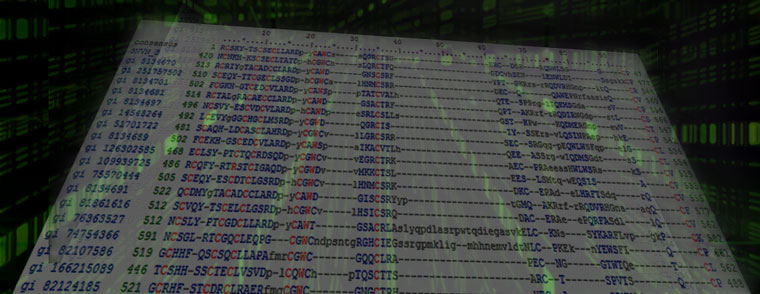

- Optimal use of a communication channel. If there are patterns in the strings of symbols to be communicated, then better codes can often be devised. Suppose an alphabet consists of four symbols, used to communicate sequences of DNA nucleotides (abbreviated A, C, G, or T) or perhaps to identify specific quadrants at some location. Statistical analysis can reveal the probabilities, p, which need to be transmitted, e.g. A (p = 0.9), C (p = 0.05), G (p = 0.04), and T (p = 0.01). We decide to devise a binary code. We could assign a two-bit codeword (00, 01, 10, 11) to each symbol, so that on average a message requires two bits per symbol.

However, more compact codes could be devised for this example. Let us invent one and assign the shorter codewords to the symbols which need to be transmitted more often: A = 0; C = 10; G = 111; T = 110. A message is easily decoded without needing any spacers. For example, 0010011000010 can only represent AACATAAAC. On average, messages using this coding convention will have a length of 1 × 0.9 + 2 × 0.05 + 3 × 0.04 + 3 × 0.01 = 1.15 bits/symbol, a considerable improvement.

Implicit in this analysis is that it is desirable for some purpose to be able to transmit useful content and to minimize waste of the available bandwidth. It is also implied that an intelligent engineer will be able to implement the new code, an assumption which makes no sense in inanimate nature.33

- Calculation of joint entropy and conditional entropy. Various technological applications exist for mathematical relationships such as joint and conditional entropies. Calculating these requires knowing about the messages sent and those received. Nature has no way or reason to do this. By performing these calculations one senses that intelligent beings are analyzing something and for a purpose.
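The four-symbol code described above (A = 0, C = 10, G = 111, T = 110) can be checked with a short script. This is only a minimal sketch: the function names are hypothetical, and the entropy comparison relies on the standard result that the entropy per symbol is a lower bound on the average length of any uniquely decodable code, which the text does not itself state.

```python
import math

# Codewords and symbol probabilities taken from the example in the text.
CODE = {"A": "0", "C": "10", "G": "111", "T": "110"}
PROB = {"A": 0.9, "C": 0.05, "G": 0.04, "T": 0.01}


def encode(message: str) -> str:
    """Concatenate the codeword of each symbol."""
    return "".join(CODE[symbol] for symbol in message)


def decode(bits: str) -> str:
    """Decode left to right. No codeword is a prefix of another,
    so no spacers between codewords are needed."""
    reverse = {codeword: symbol for symbol, codeword in CODE.items()}
    symbols, buffer = [], ""
    for bit in bits:
        buffer += bit
        if buffer in reverse:
            symbols.append(reverse[buffer])
            buffer = ""
    if buffer:
        raise ValueError("trailing bits do not form a complete codeword")
    return "".join(symbols)


def average_length() -> float:
    """Expected number of bits per symbol under PROB."""
    return sum(PROB[s] * len(CODE[s]) for s in CODE)


def entropy() -> float:
    """Entropy of PROB in bits per symbol."""
    return -sum(p * math.log2(p) for p in PROB.values())


if __name__ == "__main__":
    print(decode("0010011000010"))      # AACATAAAC
    print(round(average_length(), 2))   # 1.15
    print(entropy() < average_length() < 2)
```

The last line compares the three quantities of interest: the entropy of the source, the 1.15 bits/symbol of the compact code, and the 2 bits/symbol of the fixed-length code.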

Warren Weaver, Shannon’s mentor professor and co-author of the book edition published in 1949, discerned that meaning and intentionality are implied in their work. In the portion he wrote we read,

“But with any reasonably broad definition of conduct, it is clear that communication either affects conduct or is without any discernible and probable effect at all.”34

And Gitt’s work is foreshadowed in insights like:

“Relative to the broad subject of communication, there seem to be problems at three levels. Thus it seems reasonable to ask, serially,

“LEVEL A. How accurately can the symbols of communication be transmitted? (The technical problem.)

“LEVEL B. How precisely do the transmitted symbols convey the desired meaning? (The semantic problem.)

“LEVEL C. How effectively does the received meaning affect conduct in the desired way? (The effectiveness problem.)”35

Concern about Shannon’s initiative

Two reasons are often mentioned for claiming information theory has no relevance to common notions of information:

- “More entropy supposedly indicates more information.” But how can this be, since a crystal with high regularity surely contains much order and little information? And the chaos-increasing effects of a hurricane surely destroy organization and information.

- “Longer messages imply more information.” Really? Does the message ‘Today is Monday’ provide less information than ‘Today is Monday and not Tuesday’? Or less than ‘Tdayy/$ *!aau!##$ is Modddndday’?

These two objections, commonly encountered, reflect a weak understanding of the topic and prevent extracting a significant amount of value available.

For purposes of creation-vs-evolution discussions, a good suggestion is to profit from the mathematics Shannon drew attention to but avoid referring to information theory entirely. Shannon himself only used the phrase theory of communication later in his life. For most purposes we are interested in probability issues: how likely are naturalistic scenarios, based on specific mechanisms?

Generally, we can limit ourselves to three simple equations which are not unique contributions from Shannon.

The definition of entropy was already developed for the field of statistical thermodynamics:

$$H = -\sum_{i} p_i \log_2 p_i \tag{1}$$

Here $p_i$ is the probability of the i-th symbol. H refers here to the entropy per symbol, such as the entropy of each of the four nucleotides on DNA.
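As an illustration, the entropy per symbol can be computed from symbol probabilities with the standard formula, minus the sum of p × log2(p) over all symbols. The sketch below is not taken from the article: the function names are hypothetical and the example sequence is invented for demonstration.

```python
import math
from collections import Counter


def entropy_bits(probabilities):
    """Return -sum(p * log2(p)) in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def entropy_of_sequence(sequence):
    """Estimate entropy per symbol from the observed symbol frequencies."""
    counts = Counter(sequence)
    total = len(sequence)
    return entropy_bits(count / total for count in counts.values())


if __name__ == "__main__":
    # Four equiprobable nucleotides give the maximum of 2 bits per symbol.
    print(entropy_bits([0.25, 0.25, 0.25, 0.25]))
    # A hypothetical, strongly skewed sequence has a much lower entropy.
    print(entropy_of_sequence("AAAAAAAAAC"))
```

Estimating probabilities from observed frequencies, as `entropy_of_sequence` does, is only reasonable when the sample is large enough to be representative.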

The Shannon–McMillan–Breiman Theorem is useful to calculate the number of high-probability sequences of length N symbols, having an average entropy H per symbol:

$$\text{number of high-probability sequences} \approx 2^{NH} \tag{2}$$

An example is shown in table 1. When the distribution of all possible symbols, s, at each site is close to fully random, the numbers of messages calculated by $2^{NH}$ and $s^N$ are reasonably similar. The symbols could be amino acids, nucleotides or codons. Eqn (2) is important for low entropy sets, see table 1.
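The comparison between the number of high-probability sequences, 2^(NH), and the number of all possible sequences, s^N, can be sketched numerically. The code below works with base-2 logarithms of the counts to avoid very large numbers. The sequence length and the two distributions are assumptions chosen for illustration (the skewed one reuses the probabilities from the coding example earlier in the article) and are not the values of table 1.

```python
import math


def entropy_bits(probabilities):
    """Entropy per symbol in bits: -sum(p * log2(p))."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def log2_high_probability_count(probabilities, n_symbols):
    """log2 of 2**(N*H), which is simply N*H."""
    return n_symbols * entropy_bits(probabilities)


def log2_total_count(alphabet_size, n_symbols):
    """log2 of s**N, the count of all possible sequences."""
    return n_symbols * math.log2(alphabet_size)


if __name__ == "__main__":
    n = 100  # hypothetical sequence length
    uniform = [0.25, 0.25, 0.25, 0.25]
    skewed = [0.9, 0.05, 0.04, 0.01]
    for name, dist in (("uniform", uniform), ("skewed", skewed)):
        print(name,
              log2_high_probability_count(dist, n),
              log2_total_count(len(dist), n))
```

For the uniform distribution the two exponents coincide, whereas for the skewed distribution the exponent N × H is far smaller than N × log2(s), which is why the distinction matters for low-entropy sets.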

The difference in entropy at each site along two sequences is of paramount interest:

$$\Delta H = H_0 - H_f \tag{3}$$

where H0 is the entropy at the Source and Hf at the Destination. To analyze proteins, these two entropies refer to amino acid frequencies, calculated at each aligned site. The sequences used to calculate Hf perform the same biological function. Following a suggestion by Kirk Durston, let us call H0 – Hf the Functional Information36 at a site. The sum over all sites is the Functional Information of the sequence.

What does eqn. (3) tell us? If the entropy of a Source is unchanged, the lower the entropy which is observed at a receiver, the higher the Functional Information involved (figure 1).

On the other hand, if the entropy of a receiver is unchanged, the higher the entropy which is observed at a source, the higher the Functional Information involved (figure 2).

The ideas expressed in these three equations can be applied in various manners. Suppose the location at which arrows land on a target is to be communicated via coded messages (figure 3). A very general-purpose design would permit all locations in three dimensions over a great distance to be specified at great precision, applicable to target practice with guns, bows, or slingshots. The entropy of the Source would now be very great.

Another design would limit what could be communicated to small square areas on a specific target, with one outcome indicating the target was missed entirely. The demands on this Source would be much smaller, its entropy more limited, and the messages correspondingly simpler.

A variant design would treat each circle on the target as functionally equivalent, restricting the range of potential outcomes which need to be communicated by the Source even more.

To prepare our thinking for biological applications, suppose the Source can communicate locations anywhere within 100 m to high precision, and that we know very little about the target. We wish to know how much skill is implied to attain a ‘bullseye’. Anywhere within this narrow range is considered equivalent. We are informed of every outcome and whether a bullseye occurred. We can use eqn (1) to calculate H0 for all locations communicated and the entropy of the bullseye, Hf. Eqn. (3) is the measure of interest, and eqn. (2) can be used to determine the proportion of desired–to–non-desired outcomes.

Of much interest for creation research is the proportion of the bullseye region represented by functional proteins. This is calculated as follows. A series of sequences for the same kind of protein from different organisms are aligned, and the probability of finding each of the 20 possible amino acids, pi, is calculated at each site. The entropy at each site is then calculated using eqn (1), the value of which is Hf in eqn (3). The average entropy of all amino acids being coded by DNA for all proteins is the H0 in eqn (3).
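The procedure just described (align sequences, compute the entropy Hf at each site, subtract it from H0, and sum over sites) can be sketched as follows. Several things here are assumptions rather than the author's method: the function names, the toy alignment, and in particular the default value of H0, which is set to log2(20) (all 20 amino acids equiprobable) only as a placeholder for the average entropy the text refers to. Gaps in the alignment and small-sample effects are ignored.

```python
import math
from collections import Counter


def site_entropy(column):
    """Entropy in bits of the residues observed at one aligned site."""
    counts = Counter(column)
    total = len(column)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def functional_information(alignment, h0=math.log2(20)):
    """Sum of (H0 - Hf) over all aligned sites.

    h0 defaults to log2(20), an assumed stand-in for the Source entropy.
    """
    if len({len(seq) for seq in alignment}) != 1:
        raise ValueError("aligned sequences must have equal length")
    columns = zip(*alignment)  # one tuple of residues per site
    return sum(h0 - site_entropy(column) for column in columns)


if __name__ == "__main__":
    # Hypothetical alignment of four short sequences with the same function.
    toy_alignment = ["MKVL", "MKIL", "MRVL", "MKVL"]
    print(round(functional_information(toy_alignment), 3))
```

Fully conserved sites contribute the full H0 each, while variable sites contribute less, so strongly conserved proteins score higher.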

To these three equations let us add three suggestions:

- Always be clear whether entropy refers to the collection of messages being generated by the Source; the entropy of the messages received at the Destination; or the resulting entropy of objects resulting from processing the messages received.

- Take intentionality into account when interpreting entropies.

- Work with bits no matter what code is used. A bit of data can communicate a choice between two possibilities; two bits, a choice from among four alternatives; and n bits, a choice from among $2^n$ possibilities. If the messages are two bits long and each symbol (0 or 1) is equiprobable, it is impossible to specify correctly one of eight possible outcomes.

The symbols used by a code are part of its alphabet. The content of messages based on non-binary codes can also be expressed in bits, and the messages could be transformed into a binary code. For example, DNA uses four symbols (A,C,G,T), so each symbol can specify up to 2 bits per position. Therefore, a message like ACCT represents 2 + 2 + 2 + 2 = 8 bits, so $2^8 = 256$ different messages of length four could be created from the alphabet (A,C,G,T). This can be confirmed by noting that $4^4 = 256$ different alternatives are possible.
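The counting argument above can be verified by brute force. In this sketch the particular two-bit codeword assigned to each nucleotide is an assumption made for illustration; any one-to-one assignment would give the same counts.

```python
import math
from itertools import product

ALPHABET = "ACGT"
# Assumed two-bit codeword for each nucleotide (illustrative only).
TWO_BIT = {"A": "00", "C": "01", "G": "10", "T": "11"}


def bits_per_symbol(alphabet_size: int) -> float:
    """Maximum number of bits one symbol can specify."""
    return math.log2(alphabet_size)


def to_binary(message: str) -> str:
    """Re-express a DNA message in the assumed binary code."""
    return "".join(TWO_BIT[symbol] for symbol in message)


# Enumerate every message of length four over the alphabet.
ALL_MESSAGES = ["".join(m) for m in product(ALPHABET, repeat=4)]

if __name__ == "__main__":
    print(bits_per_symbol(len(ALPHABET)))   # 2.0
    print(to_binary("ACCT"))                # 00010111
    print(len(ALL_MESSAGES))                # 256
```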

We are now armed to clarify some confusion and to perform useful calculations. The analysis is offered as the on-line Appendix 1.37 Appendix 237 (also on-line) discusses whether mutations plus natural selection could increase information, using a Shannon-based definition of information.

Conclusion

People frequently discuss an immaterial entity called information. Information Theory usually refers to Shannon’s work. The many alternative meanings of the word lead to ambiguity, and detract from the issue of its origin. What could be meant when one claims that many copies of the same information do not increase its quantity? It cannot refer to Shannon’s theory. Information in this case could mean things like the explanatory details or know-how to perform a task, usable by an intelligent being. Shannon’s model, however, claims that two channels transmitting the same messages convey twice as much information as only one would.

What about a question like, ‘Where does the information come from in a cell or to run an automated process?’ Here information could mean the coded instructions or know-how which guide equipment and lead to useful results.

The discussion in parts 1 and 2 of this series is meant neither to favour nor to criticize how others have chosen to interpret the word information. Many valuable insights can be gleaned from this literature. For purposes of gaining a broader view of all the components involved in directing processes to a desired outcome, I felt the need to move in another direction, which will be explained in parts 3 and 4.

The evolutionary community is uncomfortable with the topic of information, but the issue is easier to ignore when there is disagreement on very basic issues, such as whether it can be quantified and whether higher life-forms contain more information or not.

Covering so many notions with the same word is problematic, and in part 3 a solution will be proposed.

References and notes

- Küppers, B.-O., Leben—Physik + Chemie? Piper-Verlag, München, 2nd edn, p. 17, 1990. Return to text.

- Truman, R., Information Theory part 1: overview of key ideas, J. Creation 26(3):101–106, 2012. Return to text.

- Bell, P., Mudskippers, marvels of the mud-flats! Creation 34(2):48–50, 2012. Return to text.

- Williams, A., Inheritance of biological information part I: the nature of inheritance and of information, J. Creation (formerly TJ) 19(2):29–35, 2005. Return to text.

- Williams, A., Inheritance of biological information part II: redefining the information challenge , J. Creation (formerly TJ) 19(2):36–41, 2005. Return to text.

- Williams, A., Inheritance of biological information part III: control of information transfer and change, J. Creation (formerly TJ) 19(3):21–29, 2005.

- Williams, ref. 4, p. 36.

- Spetner, L., Not by Chance! Shattering the Modern Theory of Evolution, The Judaica Press, Brooklyn, New York, 1998.

- Williams, ref. 5, p. 22.

- I agree that organisms were designed to adapt. Polar bears have features no other bears have, although they surely share a common ancestor since the Flood. In the Coded Information System (CIS) proposal introduced in parts 3 and 4 we suggest that all designed components to achieve an intended outcome should be treated as refinement components and quantified in bits of information.

- Williams, ref. 4, p. 38.

- Bartlett, J., Irreducible Complexity and Relative Irreducible Complexity: foundations and applications, Occas. Papers of the BSG (15):1–10, 2010, www.creationbiology.org/content.aspx?page_id=22&club_id=201240&module_id=69414.

- Shannon, C.E. and Weaver, W., The Mathematical Theory of Communication, University of Illinois Press, IL, p. 10, 1998.

- Truman, R., The Problem of Information for the Theory of Evolution; Has Dawkins really solved it?, www.trueorigin.org/dawkinfo.asp.

- Dawkins, R., The Information Challenge, www.skeptics.com.au/publications/articles/the-information-challenge/.

- Gitt, W., Scientific laws of information and their implications—part 1, J. Creation 23(2):96–102, 2009.

- Gitt, W., Implications of the scientific laws of information part 2, J. Creation 23(2):103–109, 2009; p. 103; https://dl0.creation.com/articles/p094/c09413/j23_2_103-109.pdf.

- Professor Gitt invited me to co-author a book (which time did not permit) and I did review Without Excuse which he then wrote (see ref. 26). We considered seriously a transfer to his institute to permit closer collaboration, but I ended up turning the offer down for family reasons.

- In Shannon terms, there seems to be some noise in the communication channel.

- Gitt, W., In the Beginning was Information, CLV, Bielefeld, Germany, p. 49, 1997.

- Gitt, ref. 16, p. 101.

- Gitt, ref. 16, p. 102.

- Since the mind is not material, interaction with the material brain would not be subject to time and space constraints as we know them. The same is true for logic processing within the mind. Time constraints do arise from processes bound to the brain’s matter, like transmitting signals to different parts of the brain.

- Virtually nothing is known about the processes occurring within the brain to manipulate memories. Several imaging techniques are used by neurophysiologists to identify signals and pathways involved, but how this is guided and the coding principles involved are unknown. At least 100 different specialized processing portions have been identified. See Gazzaniga, M.S., Ivry, R.B. and Mangun, G.R., Cognitive Neuroscience: The Biology of the Mind, WW Norton, New York, 3rd ed., 2009.

- Data seems to be stored physically in the neurons of brains. But the mind must direct where to look for multimedia content (i.e. retrieval of all forms of sensory memories) and concepts (e.g. mathematical formulas, or heuristic principles) in this vast repository. Everything retrieved must be evaluated and perhaps new search strategies into the brain initiated. Huge amounts of content are transferred between the brain/mind interface.

- Gitt, W., Compton, B. and Fernandez, J., Without Excuse, Creation Book Publishers, 2011, p. 70.

- There are additional examples of code-based information systems in nature which can be used to argue there are laws of information. Examples include humans being able to create codes and the existence of other codes such as the bee’s waggle-dance and behaviour which guides instincts and migrations.

- www.evoinfo.org/.

- Schneider, T.D., Evolution of biological information, Nucl. Acids Res. 28(14):2794–2799, 2000.

- Schneider, ref. 29, p. 2797.

- Schneider referred to an essay I posted on the internet, but not to the one on information theory which addresses his own work: Truman, R., The Problem of Information for the Theory of Evolution. Has Tom Schneider Really Solved It? www.trueorigin.org/schneider.asp. His promised reply, posted on his web site and communicated to me via email over a decade ago, remains unfulfilled.

- Dembski, W.A., No Free Lunch: Why Specified Complexity Cannot Be Purchased without Intelligence, Rowman & Littlefield, New York, 2002.

- For example, a code must link the DNA sequence of letters with the appropriate amino acid. The message in genetics is transmitted by mRNA, which we saw could theoretically be compressed. An adaptor molecule is needed to associate portions of mRNA to the intended amino acid. But portions of different adaptor molecules would bind to mRNA for reasons which have no relationship with the wish to compress the length of coded messages. One portion of one adaptor might bind to twenty atoms on mRNA, another adaptor to six specific atoms. Chemistry would define this and not a willful need to compress a message.

- Shannon, ref. 13, p. 5.

- Shannon, ref. 13, p. 4.

- Durston, K., Chiu, D.K.Y., Abel, D.L. and Trevors, J.T., Measuring the functional sequence complexity of proteins, Theoretical Biology and Medical Modelling 4(47):1–14, 2007; www.tbiomed.com/content/4/1/47/.

- creation.com/information-theory-part2.
