Journal of Creation 22(2):85–91, August 2008


How life works

Life is not a naturalistic phenomenon with unlimited evolutionary potential as Darwin proposed. It is intelligently designed, ruled by immutable laws, and survives only because it has a built-in facilitated variation mechanism for continually adapting to internal and external challenges and changes. The essential components are functional molecular architecture and machinery, modular switching cascades that control the machinery, and a signal network that coordinates everything. All three are required for survival, so they must have been present from the beginning, a conclusion that demands intelligent design. Life’s built-in ability to adapt and diversify looks like Darwinian evolution, but it is not. Darwin’s theory of speciation via natural selection of natural variation is correct in principle, but it cannot be extrapolated to universal ancestry. What we see instead is different kinds of organisms designed for different kinds of lifestyles, with enormous potential for diversification built in at the beginning. With time, this potential has been depleted by selection and degraded by mutations, so that we are now rapidly heading towards extinction. Intelligent design and rapid decay point to recent Creation and Fall, as the Bible tells us.

Biologists have long sought the laws that govern life, but only now, as we see life’s molecular detail, have these laws become apparent. What we discover is not a naturalistic phenomenon, but intelligent design. In this article, I shall briefly outline the most important laws and how they work together for the survival of individual organisms and their diversification into different species. Life’s evolutionary potential is not unlimited, as Darwin proposed, but limited to the permutations and combinations of what was built in at the beginning. These limits are explored in a companion article.1

Pasteur’s law of biogenesis

The first person to discover a universal law of life was French chemist Louis Pasteur. Since ancient times, life forms that had obscure reproductive stages were thought to arise via spontaneous generation from non-living matter. Fermentation—the central process in wine and cheese making—occurs spontaneously, and when Pasteur began to research the matter he was able to show that microbes—not spontaneous generation—are the cause. He demonstrated in 1861 that microbes do not arise spontaneously from their growth medium, but from physical transmission of spores, some of which are carried in air. From this, he formulated a law of biogenesis—that life comes from life—a universal principle that has stood the test of time. Origin-of-life researchers continue to look for means of abiogenesis (life from non-life) but without success.

Darwinian evolution

In his famous 1859 book On the Origin of Species, Charles Darwin proposed a universal law: that all species arise from a common ancestor through a collective struggle that leads to survival of the fittest. This produces diverse species when natural selection of random natural variations favours different survival strategies in different environments.

This theory is correct in principle within limits—the species we see around us today have indeed arisen from ancestral species via natural selection of natural variation. However, it is incorrect in its extrapolation to all of life, and is thus not a universal law. We shall see shortly that Darwin’s mechanism is just part of the larger law of survival.

Neo-Darwinists proposed that natural variation occurs primarily via mutation of genetic information. However, recent discoveries show that mutations are accidental damage events, and their apparently universal deleterious effects are sending us all to extinction on a remarkably short time scale.2

Life’s irreducible structure

In order to understand life, we need to know what it is made of. It consists mostly of architecture and machinery made from long-chain molecules having a ‘backbone’ of carbon atoms tightly linked together, with hydrogen, oxygen, nitrogen, phosphorus and sulfur attached along the sides. Many other elements are involved in other special ways as well. What makes life work is the peculiar manner in which these molecules are structured, and the intricate and super-efficient ways they work together as an integrated system.

Famous European polymath Professor Michael Polanyi described the principle of life’s irreducible structure3 in 1968, and it points unerringly to intelligent design.4 Polanyi argued that the special structure of life’s machine-like components cannot be explained by (or reduced to) the properties of the atoms and molecules they are made of; something else is required. He did not speculate on what the extra ingredient might be because molecular biology was in its infancy. However, we can now say with great certainty that it is coded information. The very precisely ‘engineered’ structures in living things are crafted by the cell, one molecule at a time, by carefully following the instructions coded on the DNA molecule.

Polanyi’s law of irreducible structure is a universal principle—life consists of information-driven molecular machinery.

Where does the information come from? According to Pasteur’s law of biogenesis, if ‘life comes from life’, then life’s information must come from its parents’ information.

The law of survival

Despite life’s marvels, it all dies. Why? Theologically, because of the Fall—Adam and Eve’s sin brought death into a perfect world.5 The biological question then is ‘How?’, and we shall see later that the answer is ‘mutations’. How then do species survive? In the original creation, reproduction glorified God in filling the earth with His creatures. In this fallen world, reproduction now solves the problem of species survival. For a species to survive, it must be capable of reproducing itself and passing on its store of functional information to its offspring.

However, if an organism were a simple mechanical structure, it would become extinct at its first malfunction. An intelligent designer must therefore build a self-repair mechanism into it. Anticipating the need for repair would also logically lead to a complementary routine maintenance system to avoid the more obvious hazards. This combination of self-maintenance and self-repair would allow life to explore beyond its normal range of operations, because any damage incurred could be repaired. Life that survives beyond its normal operating range could then be said to have adapted to a new set of conditions when frequent repair turns into routine maintenance.

But repair and maintenance mechanisms are subject to the same damage hazards as the rest of life, so a longer-term solution is required if a species is to survive. Reproduction is the answer—it starts again using fresh materials and the original recipe, to build a new organism that has less accumulated damage.

Another life-challenge is that environments have changed dramatically during Earth’s history, so an intelligent designer must build into the reproductive mechanism a system of continual variation that will produce a range of different capabilities in the offspring, so that they might have a better chance of survival than the parents. Continual variation is necessary because if only a limited number of options were on offer, life would become ‘stuck’ on the most functional option, the alternatives would degenerate by mutation, and there would be no reserves to call upon during dramatic environmental change.

So we arrive at the law of survival—life must vary and adapt or become extinct. Life works only if it is robustly flexible (via self-maintenance and repair) to survive in this generation, and can reproduce itself in continuously variable forms to provide adaptability amongst its descendants. This condition is universal across the whole spectrum of life.

Some might object that ‘living fossils’ on the verge of extinction, such as the ‘dinosaur pine tree’ Wollemia nobilis,6 show no signs of genetic variation and thus contradict this law. Not so. This law applies to how the Creator made the original baramins, and not necessarily to the remnant populations we see today, whose built-in original stores of variation have been exhausted by selection and depleted by mutation.

The law of facilitated variation

Life, by and large, is beautiful and inspiring and wonderfully adapted to seemingly endless ways of making a living. There are some ghastly forms—parasites can do terrible things to the living bodies of their hosts. But if we put aside our squeamishness we can still marvel at the fact that the parasite has developed astonishing ways to ‘earn a living and provide for its family’, often through multiple stages of very different life-forms in very different environments and/or host organisms.

Neo-Darwinists attributed all of this functional beauty to mutations and natural selection. But it has long been known that mutations produce defects and monsters, not beautifully functional adaptations to different ways of life. According to Kirschner and Gerhart’s theory of facilitated variation,7 the enormously varied but functional beauty of life results from a combination of three fundamental components:

- robust core processes of cell structure, function and body plan,

- modular regulatory mechanisms that can be easily pulled apart (like Lego® blocks) and rearranged into new circuit-and-switch combinations that generate variation by activating different components in new times, places and amounts during embryonic development,

- signaling systems to coordinate everything.

Organisms have a built-in capability for variation which facilitates changes via an integrated modular structure that is able to maintain functionality in the face of internal and external challenges.

We know of no life form that does not have these three components (I shall call them the ‘Kirschner–Gerhart properties’) so they constitute a universal principle, the law of facilitated variation—species survival requires:

- a robust core structure,

- a regulatory system that provides built-in capacity for variation through randomly rearranged module combinations, and

- a signaling system to coordinate and maintain the process.

Variation and stasis

Species survival requires a balance between variation and stasis. To create life, there would be no point putting together a molecular machine that reproduced perfect copies of itself. It could not adapt to changing conditions, either in the current generation, or amongst its descendants. There is also no point putting together a machine that continually varies itself, because its variations would escalate into error catastrophe.8 Neither kind of machine will work on its own, because both kinds are needed together. Continuous variation has to be achieved in a manner that is compatible with—and thus limited by—the necessity for continuous function.

This principle is illustrated in games of chance with tossed coins or dice, or a spinning roulette wheel (figure 1). A continuously variable outcome depends upon maintaining the rigid mechanical structure and continuous functional performance of the mechanism.

The law of inverse causality

The law of facilitated variation turns causality on its head and takes us out of the realm of physics and chemistry. To understand this crucial statement, we need to look at the law of cause and effect.

The law of cause and effect

The law of cause and effect is one of the most fundamental in all of science. Every scientific experiment is based upon the assumption that the result of the experiment will be caused by something that happens during the experiment.

Now a naturalistic origin-of-life scenario must explain life in terms of a series of naturalistic causes. The first cause must produce the second step, which then causes the third step, and so on. The logic must be in the following order:

A → B → C → … Z → life

where the arrow means ‘causes’ and A would be either chemicals (in a proteins-first scenario) or information (in an information-first scenario). All naturalistic theories are bound by this principle of causality because they do not admit any purpose in the process that could manipulate the steps towards a predetermined goal, nor is any intelligent agent able to help.

But according to the law of facilitated variation we have to rule out such a scenario because none of the steps A to Z have the necessary properties to survive. The Kirschner–Gerhart properties define the necessary conditions for survival and they are not present in the A to Z series, only in the ‘life’ at the end of the series.

If Darwin had conducted an origin-of-life experiment, he could justifiably have begun with chemicals in ‘a warm little pond’ because the molecular basis of life was not understood at that time. However, we now know too much to allow such a thing. Because we now know the laws of survival and facilitated variation, an intelligent designer would have to begin with that end in sight. To have anything less in mind would be to decide upon failure.

To have a particular end-product in mind is a case of inverse causality. In normal causality, the cause always comes before the effect. In biology however, we see the universal occurrence of inverse causality, recently acknowledged by Darwinian philosopher of science Michael Ruse.9 His chosen example was that stegosaur plates begin forming in the embryo but only have a function in the adult—supposedly for temperature control. Other examples include the adult’s need for robustness in the face of environmental challenges that the zygote has not yet faced, and the adult’s need for successful reproduction of variable offspring that is essential to its species’ survival. All these lie in the zygote’s future but must be present in the zygote.

For the zygote to have even arrived where it is, all of these events must have been in view in its progenitors’ developmental program as well as in its own developmental program, otherwise life would have become extinct. This is why the ability to survive takes us out of the realm of physics and chemistry—and into the realm of intelligent design. In physics and chemistry, normal causality rules. In life, inverse causality rules. Although life uses the normal causality of physics and chemistry, it is not bound by its rules.

This characteristic is universal in all forms of life, so it constitutes a law of inverse causality—the Kirschner–Gerhart properties inversely cause the development of the adult from the zygote and they produce the adaptive variety necessary for survival. Since all this must be present at the beginning for species to survive, intelligent design is the only possible explanation.

The law of code variation

How does life manage to transcend normal causality and move into inverse causality? The answer is coded information! It is through the coded information in our genomes that inverse causality rules biology. The adult end-product of development is coded into the zygote’s genome. The genome guides the zygote to fulfil a destiny that was written down before the event occurred. It could be no other way, because life that lacked the Kirschner–Gerhart properties could not survive.

This leads us to another universal law, the law of code variation: the Kirschner–Gerhart properties are encoded in the zygote, and it is through the zygote’s built-in ability to read, implement, rearrange and replicate this coded information that the Kirschner–Gerhart properties inversely cause the development of the organism, the production of its variable offspring, and its ability to adapt and survive.

Life does its own natural genetic engineering,10 illustrated most clearly in the fact that all our tools for genetic engineering have come from living organisms. It occurs in eukaryotes during the remarkable process of meiosis when the cell cuts the father’s and mother’s chromosomes up into segments and rearranges them so that the gene combinations get mixed up. A number of other enzyme-mediated changes can also occur—deletions, insertions, inversions, duplications, transpositions and retro-transpositions.

Microbes (bacteria and viruses) have the added ability to splice foreign DNA in and out of their genomes, thereby ‘sampling’ the genetic environment for potentially useful sequences. The single-celled eukaryotic ciliate Oxytricha trifallax displays an extraordinary talent for natural genetic engineering. It has two nuclei in its single cell, one large and one small. The large nucleus carries out the everyday activities of life, and the small nucleus remains quiet until it is time to reproduce. At reproduction, the small nucleus undergoes meiosis, but the chromosomes in the large nucleus are chopped up into hundreds of thousands of separate pieces. In the new daughter cells, all these fragments are then re-assembled into chromosomes in a new large nucleus.11

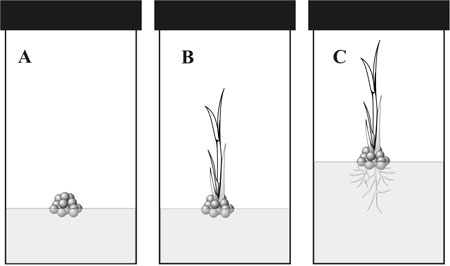

Compartments, modules and signals

Development of the zygote into an adult is organized by the use of compartments, modules and signals of different kinds, and these can operate independently, yet in a cooperative manner so as to produce a functional whole organism. We can illustrate the basic principles in both plant and animal development with two simple experiments.

Figure 3. A planarian flatworm is a free-living freshwater creature with two eyespots at one end and a feeding tube at the other end (A). When cut in half, each of the two halves normally regenerates a complete organism (B). However, when the β-catenin signaling system is blocked, the head end regenerates another head and the tail end regenerates another tail (C).


Many plants are easily grown in cell culture by taking some stem cells from a growing tip of a root or shoot, and placing them on a sterile growth medium (figure 2). After a few days, they will multiply and produce a mass of undifferentiated cells. If a drop of cytokinin is then added, the cells will begin to organize into a shoot, with stem and leaves, but with no root. If the half-plant is then transferred to a new growth medium with auxin in it, a root system will develop and we will soon have a whole plant with both roots and shoots.

A comparable system in animals is seen in the planarian flatworm. Planarians are free-living freshwater animals that have the remarkable ability to regenerate themselves after being cut in half! The signaling system that controls head and tail regeneration was recently identified by experimentally interfering with signals to see what would happen (figure 3). When the β-catenin signaling pathway was blocked, a head developed at the tail end of the head section, producing a two-headed flatworm, and a tail developed at the head end of the tail section, producing a two-tailed flatworm.12

Now cytokinin and auxin are small hormone molecules, and β-catenin is a protein. None of them carries coded instructions like RNA or DNA, so they are unable to tell the cells what to do or how to do it. They simply act as signals that carry a GO/STOP message.

The plant cells already have the built-in ability to produce a whole plant—it only needs to be switched ON. But notice that the top part of the plant can develop quite independently of the bottom part. Likewise in the planarian flatworm, the head end and the tail end can develop independently of one another. This is compartmentation. Organisms are arranged into compartments so that development can proceed in one compartment independently of what happens in an adjoining compartment. But notice also that adjoining compartments cooperate at the joining edges so that the development in each compartment is smoothly integrated with its neighbours. Compartments also exist at smaller scales, for example leaves, stems, flowers, etc. in plants, and limbs, head, alimentary tract, etc. in animals. The human embryo contains about 300 different compartments.

Within each compartment there are numerous modules that carry out specific tasks. For example, energy supply is universally provided in all forms of life by ATP (adenosine triphosphate),13 which is made by a proton-driven molecular motor, ATP synthase. In eukaryotes, these motors are especially associated with mitochondria, the ‘powerhouses’ of cells. So there will be modules that contain the information to make ATP synthase motors and modules that contain the information to make mitochondrial powerhouses. These components are then activated or repressed by the signaling network in a GO/STOP manner to provide energy for, and synchronize, the daily round of metabolic activity.

The law of signals

The universal rule governing cell signals is that they are permissive and not instructive. They are GO/STOP messages, and do not contain any instructions as to what is supposed to be done or not done (see figure 4). The modules in the cell must therefore already possess the information for what to do and how to do it, and must also possess the information required to interpret which signal means GO and which means STOP. All that the signal network needs to do is to send the right sequence of GO and STOP signals for development to proceed from zygote to adult.

The law of signals, then, is that signaling networks are permissive and not instructive.
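The permissive/instructive distinction can be made concrete with a toy model. The Python sketch below is a deliberate simplification under stated assumptions: each signal is reduced to the binary GO/STOP described in the text, and the `Signal`, `Module` and `develop` names are hypothetical, not part of any biological software. The ‘shoot’ and ‘root’ modules merely echo the plant-culture example above. What it shows is that the signal carries no instructions; whatever gets built is already stored in the module that receives it.

```python
from enum import Enum
from typing import Callable, Dict, List, Optional, Tuple


class Signal(Enum):
    """A permissive signal: it says only GO or STOP, never what to do."""
    GO = "GO"
    STOP = "STOP"


class Module:
    """Hypothetical module that already stores what to do and how to do it."""

    def __init__(self, name: str, program: Callable[[], str]) -> None:
        self.name = name
        self._program = program  # the instructions live inside the module, not in the signal
        self.active = False

    def receive(self, signal: Signal) -> None:
        # The module interprets the signal itself: GO switches it ON, STOP switches it OFF.
        self.active = signal is Signal.GO

    def run(self) -> Optional[str]:
        return self._program() if self.active else None


def develop(modules: Dict[str, Module], schedule: List[Tuple[str, Signal]]) -> List[str]:
    """Deliver GO/STOP signals in order, then collect output from every module left ON."""
    for name, signal in schedule:
        modules[name].receive(signal)
    return [m.run() for m in modules.values() if m.active]


modules = {
    "shoot": Module("shoot", lambda: "stem and leaves"),
    "root": Module("root", lambda: "root system"),
}
# The same GO value reaches both modules, yet each builds something different.
print(develop(modules, [("shoot", Signal.GO), ("root", Signal.GO)]))
# ['stem and leaves', 'root system']
```

Because the same `Signal.GO` value produces two different results, the outcome depends entirely on the receiving module, which is what ‘permissive, not instructive’ means in this sketch.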

A crucial consequence of this law is that modules must contain, and maintain, certain basic properties, which are the subject of the law of modules.

The law of modules

A module in engineering is a unit that has a stable internal structure and function such that it can be connected to a number of different other systems and can interact with them, but without the interaction interfering with its own internal structure or function.14 Kirschner and Gerhart attribute life’s built-in ability to vary continually to the modularity of its regulatory system. Modularity in the regulatory domain is concerned with information content.

In an earlier article on biological information, I pointed out that Shannon’s theory of information is entirely inappropriate in biology because it ignores the four dimensions of meaning in the genetic code.15 If we apply the engineering definition of a module to information, we see that its crucial property is to be able to use the information in a variety of ways while keeping it together in its appropriate context so that its meaning is preserved. This is a fundamental point that is entirely lost upon those who use Shannon theory to study biological information, and who rely upon neo-Darwinian accidents (mutations) to drive the course of evolution.

Meta-information

In neo-Darwinian theory, the only relevant information in DNA was thought to be that which codes for proteins (genes). The rest was thought to be ‘junk’ leftovers from the past, or useless duplicates of currently functional genes. But when we look beyond the Shannon definition of information and begin to see the other four dimensions of meaning, we come across an entirely different kind of information that is called meta-information. Meta-information is information about information, akin to metadata in the computer world.

Meta-information in biology is information about how to use the information passed on to the zygote from the parents. A living organism needs to have the following kinds of meta-information:

- How to read the information inscribed on, in and around the DNA molecule.

- When to read what bits of information inscribed on, in and around the DNA molecule.

- What to do with the information once it is read from the DNA.

- How to regulate the mechanical structures that implement the information.

- How to repair and maintain the information store and the mechanisms that use it.

- How to pass on the information store and its support systems to the next generation.

There are two crucial features of meta-information that confound all naturalistic explanations for the origin of new biological information. First, it cannot come into existence by a spontaneous random process. A random event is, by definition, one that occurs independently of other such events. But meta-information is, by definition, entirely dependent upon the information that it relates to. That is, it has no meaning or purpose apart from the information that it relates to. It therefore cannot come into existence by any kind of independent process.

Second, without it, the basic information is of no use. For example, a zygote could have all the genes required to turn it into a human being, but without the necessary meta-information to instruct the cell in how and when to use which genes, the genes themselves would be useless. Information and meta-information are mutually inter-dependent—each is useless without the other.

Meta-information is the information you need to have in order to use the information you want to have to provide you with the capacity for your survival and the survival of your descendants and your species.

We now have two more universals that constitute laws of life. First, conserved core functional machinery must be coded in two different kinds of functional information—the primary functional information (mostly genes), and the meta-information needed to implement the primary information (mostly regulatory information). Second, in order to maintain the functional integrity of life, the conserved core information must be kept together in modules that are difficult to break apart, but whose signal circuit connections can be pulled apart and put together again in different ways to produce a built-in system of variation.

The law of modules is that the basic module of information has to contain functionally integrated primary information plus the necessary meta-information to implement the primary information. This information must consist of what to do, how to do it, what the GO signal is and what the STOP signal is. This information is not necessarily in coded form—it may be designed into a mechanism that has the required properties.
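The four items listed in the law of modules can be written down as a simple record. The Python sketch below is illustrative only: `InfoModule`, `respond` and the signal labels `"S1"` and `"S2"` are hypothetical, and the example contents are borrowed from the ATP discussion earlier in the article. Freezing the record is one way to mirror the engineering definition of a module quoted above, in which connecting the unit to other systems must not alter its internal structure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)  # frozen: using the module elsewhere cannot alter its contents
class InfoModule:
    """Hypothetical record of the four items named in the law of modules."""
    what_to_do: str    # primary information
    how_to_do_it: str  # meta-information: how to implement the primary information
    go_signal: str     # meta-information: which signal means GO
    stop_signal: str   # meta-information: which signal means STOP


def respond(module: InfoModule, signal: str) -> Optional[str]:
    """Return the module's action for a signal it recognises; ignore anything else."""
    if signal == module.go_signal:
        return f"{module.what_to_do} ({module.how_to_do_it})"
    if signal == module.stop_signal:
        return "halt"
    return None  # no meta-information for this signal, so it has no effect


energy = InfoModule("make ATP", "proton-driven motor", go_signal="S1", stop_signal="S2")
print(respond(energy, "S1"))  # make ATP (proton-driven motor)
print(respond(energy, "S9"))  # None
```

A signal the module has no meta-information for simply has no effect, which reflects the claim that the module, not the signal, supplies the interpretation.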

Mutations

We can now define a mutation more precisely as an accident that disrupts the meaning contained in a module or in the signal that activates or represses it. Rarely, a mutation can have survival value; sickle-cell trait in human populations exposed to malaria is a classic case. But too many accidents make extinction more certain, as disruptions are far, far more often deleterious than beneficial and in no case lead to an increase in information.

There is a huge difference between mutations that cause random accidental damage and the random change capacity that is built into the facilitated variation system. Independent segregation and random recombinations of alleles that occur during sexual reproduction produce usable phenotypic variation and do not degrade the machinery of life or its genome. Other modular rearrangements such as insertions, deletions, transpositions and retro-transpositions can also provide potentially useful new combinations. This illustrates why Kirschner and Gerhart used the analogy with Lego® blocks: random rearrangements of blocks do not degrade the integrity of the blocks themselves.
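The contrast between reshuffling intact blocks and damaging a block can be shown with a toy model. In the hypothetical Python sketch below, each four-letter string stands in for one intact module, `rearrange` models built-in variation as a reordering of whole blocks, and `mutate` models an accidental damage event by overwriting one character inside one block with `"X"`. The strings, the names and the single-character damage model are assumptions made for illustration; real segregation, recombination and transposition are far more complex.

```python
import random
from typing import List

# Hypothetical blocks: each string stands in for one intact module of information.
BLOCKS = ["AAAA", "CCCC", "GGGG", "TTTT"]


def rearrange(blocks: List[str], rng: random.Random) -> List[str]:
    """Built-in variation (Lego analogy): a new order, every block left intact."""
    new = blocks[:]   # copy so the original list is untouched
    rng.shuffle(new)  # reorder whole blocks only
    return new


def mutate(blocks: List[str], rng: random.Random) -> List[str]:
    """Accidental damage: overwrite one character inside one randomly chosen block."""
    new = blocks[:]
    i = rng.randrange(len(new))     # which block is hit
    j = rng.randrange(len(new[i]))  # which position inside that block
    new[i] = new[i][:j] + "X" + new[i][j + 1:]
    return new


rng = random.Random(0)  # fixed seed so the run is repeatable
shuffled = rearrange(BLOCKS, rng)
damaged = mutate(BLOCKS, rng)
print(sorted(shuffled) == sorted(BLOCKS))  # True: same blocks, possibly in a new order
print(all(b in BLOCKS for b in damaged))   # False: one block no longer matches any original
```

Note that `rearrange` can only produce orderings of blocks that already exist, which parallels the article’s point that variation is limited to what was built in at the beginning.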

The law of degeneration

Genomes degenerate over time for two main reasons. First, natural selection is a process of individual extinction—organisms that die without reproducing take their unique store of built-in variation with them to the grave, making it no longer available to future generations. Second, like all organic molecules, DNA is inherently unstable and it accumulates molecular damage with time.

A typical human cell undergoes 2,000 to 10,000 spontaneous DNA hydrolysis damage events every day simply because its interior is an aqueous environment.16 In addition, DNA is constantly consulted for information during daily metabolism and this causes transcription stress fractures, fork collapse and polymerase fidelity errors. Reactive oxygen species (ROS) produced during normal metabolism cause biochemical disruptions. Environmental toxins and ionizing radiation cause direct physical/chemical damage and indirect injuries through ROS production. Further physical damage arises when cell division pulls chromosomes apart. And at meiosis, during nuclease-mediated genome rearrangements, and during various kinds of recombination events, errors and breaks in the nucleotide sequence regularly occur.

The only reason that DNA functions as well as it does is that cells come equipped with an amazing array of cooperative DNA repair mechanisms. For example, polymerase replication during cell division might produce 6 million errors per cell, but proofreading machinery can reduce this to 10,000, and mismatch repair machinery can then reduce it to 100. It appears to be impossible, however, to replicate the 6 billion nucleotides in a human cell in a completely error-free manner.17
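The error counts in this paragraph can be turned into per-nucleotide rates and fold reductions with simple arithmetic. The Python sketch below uses only the figures quoted in the text (6 billion nucleotides; 6 million, 10,000 and 100 errors); the names `GENOME_NT`, `STAGES`, `per_nucleotide_rate` and `fold_reductions` are illustrative, and the figures themselves are the article’s rounded examples rather than measured constants.

```python
GENOME_NT = 6e9  # nucleotides copied per human cell division (figure quoted in the text)

# (stage, residual errors per cell division), as given in the text
STAGES = [
    ("polymerase alone", 6_000_000),
    ("after proofreading", 10_000),
    ("after mismatch repair", 100),
]


def per_nucleotide_rate(errors: float, genome_nt: float = GENOME_NT) -> float:
    """Residual errors divided by the number of nucleotides copied."""
    return errors / genome_nt


def fold_reductions(stages):
    """Fold improvement contributed by each successive repair stage."""
    return [prev[1] // cur[1] for prev, cur in zip(stages, stages[1:])]


for label, errors in STAGES:
    print(f"{label:<22} {errors:>9,} errors  ~{per_nucleotide_rate(errors):.1e} per nucleotide")

print(fold_reductions(STAGES))        # [600, 100]
print(STAGES[0][1] // STAGES[-1][1])  # 60000 overall
```

On these figures, proofreading gives roughly a 600-fold improvement and mismatch repair a further 100-fold, about 60,000-fold overall, yet roughly 1 error in 60 million nucleotides (about 1.7e-8 per nucleotide) still remains.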

All of life’s machinery operates at extremely high cost in molecular damage. In an earlier article,2 I gave examples of molecule turnover rates on high-wear surfaces on the order of 30 seconds, and each cell in our body typically experiences on the order of ten DNA damage-and-repair events every second. Further evidence is that of the approximately 100 trillion cells in an adult human body, about 70 to 90 billion are dismantled and recycled each day because of irreparable damage, with the greatest turnover in high-usage areas like blood, intestinal tract and skin.18 The cell’s built-in repair and maintenance systems also suffer molecular damage and so cannot attain 100% efficiency, hence degeneration is inevitable.
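The figures in this paragraph are order-of-magnitude estimates, and converting them to common units may help show their scale. This Python sketch uses only the numbers quoted in the text (about ten damage-and-repair events per cell per second; 70 to 90 billion of roughly 100 trillion cells recycled per day); the function names are illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def damage_events_per_day(events_per_second: float) -> float:
    """Scale a per-second rate of DNA damage-and-repair events to a daily total."""
    return events_per_second * SECONDS_PER_DAY


def daily_turnover_fraction(recycled_per_day: float, total_cells: float = 100e12) -> float:
    """Fraction of the body's cells dismantled and recycled in one day."""
    return recycled_per_day / total_cells


print(f"{damage_events_per_day(10):,.0f} damage-and-repair events per cell per day")  # 864,000
for recycled in (70e9, 90e9):
    print(f"{daily_turnover_fraction(recycled):.2%} of cells recycled per day")  # 0.07%, then 0.09%
```

Ten events per second amounts to roughly 864,000 per cell per day, and the quoted turnover corresponds to about 0.07% to 0.09% of the body’s cells each day.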

It is not only genetic degeneration that is inexorable, but also epigenetic degeneration. Epigenetic mechanisms are increasingly being seen to be crucial to how DNA functions. A multitude of epigenetic mechanisms are coming to light and more may yet be anticipated. They include the side chains that are attached to the DNA and control the expression of the associated nucleotides, histone-based nucleosome patterning, chromatin structure, positioning of DNA segments within the nucleus, and transcription and post-transcription errors and modifications.

A large study of identical twins showed that their gene expression patterns were very similar while young, but differences accumulated with age, and this can be largely and perhaps entirely attributed to the accumulation of epigenetic defects. Their DNA remains identical, but their epigenetic patterns of DNA expression change in different ways during life. Environment plays a big role in this; different lifestyles produced greater epigenetic differences than identical lifestyles. The authors concluded that accumulation of epigenetic defects would probably occur at a faster rate than genetic mutations because the consequences for survival are probably less dramatic and cells do not have repair mechanisms to correct them.19

We therefore come to a law of degeneration: the rate of both genetic and epigenetic damage exceeds the capacity of the cell to repair it, and most of the damage is too phenotypically insignificant for natural selection to detect and remove from the population. The net result is inexorable degeneration of organisms and their genomes. Estimates based upon a number of different numerical models indicate a time to extinction of tens to hundreds of thousands of years.2

Conclusion

Life is intelligently designed to survive via a built-in system of facilitated variation that enables organisms and their offspring to adapt to changing conditions. This leads over time, and across different environments, to diversification of species. Charles Darwin was correct in proposing that the species we see around us today have arisen via the mechanism of natural selection of natural variation, but he was wrong in extrapolating it to all life. Organisms designed with the capacity for facilitated variation would have had enormous initial capacity for rapid diversification from one generation to the next, and vast numbers of new species could have rapidly filled the early earth and rapidly re-colonized the destroyed earth within a few generations after the Flood. However, natural variation is limited by what was built-in to begin with—regulatory signals cannot switch ON features that don’t exist in the organism’s genome. Despite life’s functional beauty, selection depletes gene pools and mutations degrade genomes, and extinction is coming on a time scale of only thousands, not billions, of years. Intelligent design plus rapid extinction point clearly to recent Creation and Fall, as the Bible tells us.

Acknowledgments

I am grateful for the comments of three referees and numerous colleagues, which have helped to improve this article.

References

1. Williams, A.R., Molecular limits to natural variation, Journal of Creation 22(2):97–104, 2008.

2. Williams, A.R., Mutations: evolution's engine becomes evolution's end, Journal of Creation 22(2):60–66, 2008.

3. Polanyi, M., Life's irreducible structure, Science 160:1308–1312, 1968.

4. Williams, A.R., Life's irreducible structure, Part I: autopoiesis, Journal of Creation 21(2):109–115, 2007; Part II: naturalistic objections, Journal of Creation 21(3):77–83, 2007.

5. Genesis 1–3; Romans 5:12; 8:18–25; 1 Corinthians 15.

6. Official website of the Wollemi Pine, <www.wollemipine.com/index.php>, 22 May 2008.

7. Kirschner, M.W. and Gerhart, J.C., The Plausibility of Life: Resolving Darwin's Dilemma, Yale University Press, New Haven, CT, 2005. See also Williams, A.R., Facilitated variation: a new paradigm emerges in biology, Journal of Creation 22(1):85–92, 2008.

8. Crotty, S., Cameron, C.E. and Andino, R., RNA virus error catastrophe: direct molecular test by using ribavirin, PNAS 98(12):6895–6900, 2001.

9. Ruse, M., Darwin and Design: Does Evolution Have a Purpose? Harvard University Press, Cambridge, MA, 2003.

10. Shapiro, J.A., Bacteria are small but not stupid: cognition, natural genetic engineering and socio-bacteriology, Studies in History and Philosophy of Biological and Biomedical Sciences 38:807–819, 2007.

11. Nowacki, M., Vijayan, V., Zhou, Y., Schotanus, K., Doak, T.G. and Landweber, L.F., RNA-mediated epigenetic programming of a genome-rearrangement pathway, Nature 451:153–158, 2008.

12. Cesari, F., Cell polarity: heads or tails? Nature Reviews Molecular Cell Biology 9:94–95, 2008.

13. ATP is the most common of a class of similar energy-packaging molecular machines.

14. Sauro, H.M., Modularity defined, Molecular Systems Biology 4:166, 2008; Del Vecchio, D., Ninfa, A.J. and Sontag, E.D., Modular cell biology: retroactivity and insulation, Molecular Systems Biology 4:161, 2008.

15. Williams, A.R., Inheritance of biological information, Part I: The nature of inheritance and of information, Journal of Creation 19(2):29–35, 2005.

16. Lindahl, T., Instability and decay of the primary structure of DNA, Nature 362:709–715, 1993.

17. McCulloch, S.D. and Kunkel, T.A., The fidelity of DNA synthesis by eukaryotic replicative and translesion synthesis polymerases, Cell Research 18:148–161, 2008.

18. Apoptosis, <en.wikipedia.org/wiki/Apoptosis>, 2 April 2008.

19. Fraga, M.F. et al., Epigenetic differences arise during the lifetime of monozygotic twins, PNAS 102(30):10604–10609, 2005.
