Supplementary information for “The AI Revolution: what it means for you”

Background

AI programs can do the tasks they do because they have learnt how to do them through a process called machine learning. AI models can learn because they use computer models called neural networks which are designed to mimic the structure and function of the neural networks in the human brain. The artificial neurons in the network are connected to one another such that each neuron receives input values from many other neurons, performs a computation on the input values, and then passes the output values of the computations onto many other neurons (figure 1A). This is similar to the way that our brains are thought to compute information.

This supplementary text will go into a bit more detail than the main article. For those who wish to dive even deeper, I recommend checking out the references.

The computation that each neuron performs

The computation that each neuron performs has two important parameters (numbers)—weights and biases (refer to figure 1B as you read the next few sentences to understand what is going on). Each connection between two neurons has a weight value. This is just a number that the output of a neuron is multiplied by before it is received by the neuron at the other end of the connection. The receiving neuron then sums up all of its input values to get a new value called the weighted sum. Each neuron has a bias value that it adds to the weighted sum it calculates. The output of this equation is then put through one last equation (such as a sigmoid function, for you mathematicians out there) before being passed on to other neurons.

One way neural networks differ is in their architecture (the pattern of how the neurons are wired together). Different neural network architectures are used depending on what the AI model is being trained to do.1

How AI models learn

A neural network learns by changing the values of the weights and biases such that it improves over time. Imagine that a neural network is learning to predict the next word in a sentence. The sentence can be converted into numbers, run through the neural network (with each neuron performing the calculations explained above) and then the output numbers can be converted back into a word which is the prediction of the neural network. During training, through some complex calculus, the neural network can calculate how each weight and bias value needs to be changed (up or down and by how much) such that its predictions more closely match the true next word. This process of changing the values of the weights and biases is called backpropagation.2 It is this process of backpropagation that allows an AI model to learn almost anything, provided that it is given good training data.

Large Language Models

Most Large Language Models (LLMs), such as ChatGPT, work by predicting the next word over and over again. Let’s use the following sentence as an example:

“Creation Ministries International …”

LLMs perform a calculation that essentially asks, “Given the words already written, and the patterns of language I have learnt from my training data, what might the next word be?” They come up with a list of words and assign a probability to each word that it is the next word. In this case, ChatGPT predicts the following possible words:

“Creation Ministries International is …”

“Creation Ministries International focuses …”

“Creation Ministries International provides …”

“Creation Ministries International has …”

“Creation Ministries International advocates …”

“Creation Ministries International hosts …”

“Creation Ministries International engages …”

“Creation Ministries International publishes …”

LLM’s then select one of these words and adds it to the sentence. Different LLM’s use different methods to choose which words in the list to choose. After adding the word to the sentence, LLM’s repeat the calculation of predicting the next word. LLMs do this over and over again to write long paragraphs of text. These are the basics of how ChatGPT works.

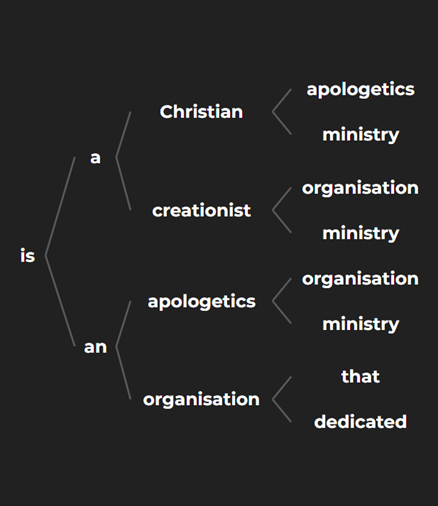

Figure 2 shows a tree of ChatGPT’s predictions for the next four words in the sentence.

AI programs can also learn how to increase a reward (such as wins in a game) through these same principles; except rather than changing the weights and biases to make their predictions better match reality, they are changing their weights and biases to increase their rewards. By playing many matches of a game, an AI program can calculate how each weight and bias needs to change for it to win more often.

This method can also be used to improve an AI with human feedback. Humans can rate the output of an AI model and the AI can modify its weights and biases to increase the ratings humans give it. For example, this can help an AI draw images that are more visually stunning and better match the style humans want (this was done to improve Midjourney V4 and V5). Human feedback was also used to improve ChatGPT’s responses. This is another way that ChatGPT could have acquired its current materialistic, humanistic bias, since the humans giving it feedback may have preferred such answers.

Getting humans to give feedback to an AI model to help it improve can take a very long time and is a lot of work for humans. One method used to get around this is to train a second AI to predict what rating a human would give the output from the first AI model. Once this secondary model is very good at this, it can take over the role of the humans and can train the first AI model much faster than humans could.

So in summary, an AI model learns by performing very complex calculus to determine what the optimum values of its weights and biases are for it to do the task it is trained to do.

Here, I have only given a very basic explanation for a very complex topic and there are many arguably important things I have left out. If you are interested in diving deeper into how neural networks work, I highly recommend watching 3Blue1Brown’s YouTube series on Neural Networks.2

Watermarking AI-generated text

In an effort to prevent students from cheating by getting a chatbot to write an assignment for them, at the time of writing this article, there is work being done to watermark AI-generated text in a way that is undetectable by humans but can be detected by a computer with high statistical certainty. There are many ways this could be done. One way would be as follows: Every time an AI predicts a list of the possible next word to add to the sentence based on the previous words, it could split its list into a ‘green list’ and a ‘red list’. This splitting of the list would be done in some predetermined way depending on, for example, the previous word in the sentence. The values that determine the likelihood that the AI will select a word in the list can be slightly decreased for the words in the red list and slightly increased for the words in the green list. The AI can then select a word, with its selection slightly biased towards words in the green list. The chatbot would repeat this process for every word it adds to the text.

An AI detector could then look at every word in a piece of text it is given and determine if each word would be on the red list or green list of the AI (based on the method the AI used to generate the red lists and green lists). If the text is written by an AI, there will be a clear bias towards green list words (i.e., there will be far more green list words than red list words). If the text is written by a human there will be about a 50-50 split of green list and red list words. The AI would only have to have a relatively slight bias towards green list words for an AI detector to detect that some text is written by an AI with high statistical confidence.3

If this method is so effective, why is it not already integrated? Firstly, it requires more computation which makes the chatbots more expensive to run. Secondly, at present the method can easily be worked around. For example, if the red lists and green lists are generated based on only the previous word, a student can get it to write their assignment but ask it to add an emoji between every word. The student can then remove all of the emojis and the AI detector would not be able to detect it as AI-generated text (because the previous words – the emojis – that the red lists and green lists were generated on have been removed).4

Similar, but likely more complex, watermarking techniques could also be used to watermark AI-generated images.

More advances in game-playing AI’s

MuZero, a more recent AI by DeepMind, is as good as AlphaZero at Chess, Shogi and Go but can also play Atari games, teaching itself entirely from scratch with each new type of game.5 MuZero is not even told the rules of a game; it has to figure them out from scratch as well. This could have huge implications for teaching AI models how to do tasks in the real world where “the rules of the game” are not known.6 MuZero has already been used to help improve video compression on YouTube.7

In 2019, DeepMind released AlphaStar, an AI that can beat world champions at the strategy video game StarCraft II.8 This is significant because of how incredibly complex StarCraft II is and because of the level of rapid, long-term strategic thinking required to play the game.

OpenAI developed an AI called OpenAI Five that can defeat world-champion players at the video game Dota 2, said to be one of the hardest e-sports games.9 Like AlphaZero, it learnt the game from scratch by playing against itself.

Neural Radiance Fields (NeRFs)

One other advance in AI I am personally excited about is Neural Radiance Fields (NeRFs). A NeRF is an AI model that predicts what a 3-dimensional scene would look like from any perspective based on one or more input images of the scene.10 Using a NeRF, you can change the angle that a photo was taken from or create a highly-detailed 3D model of your house from a few photos in each room. The results can be very impressive: The images it synthesizes of novel views look like they were original photos.11 Movie directors can use NeRFs to convert frames from a video into a 3D model that the director can move a virtual camera through to get shots from angles that were never captured by the original video. NeRFs are likely to have a significant impact on photography and videography. Watch this space!

Google is now also integrating NeRFs into Google Maps, allowing anyone to explore cities and buildings in an immersive, highly-detailed 3D view.12 In the future, Street View might not be a series of still photos but a highly-detailed NeRF that you can explore from any angle.

AI—why now and not earlier?

In the first quarter of 2023, I researched the question: Why is the AI revolution happening now and not earlier? Three main factors were apparent.

- The increase in computing power. It has been known for quite some time—decades, in fact—that it would be possible to create large neural networks, but the huge computing power needed has only recently become available.

- The availability of good training data. Many AI models are only as good as the data they are trained on. Thus, in order to have advanced AI models, having a large amount of good training data is important. Before the internet, it was hard to get such large databases of good training data. The rise and growth of the internet created a huge database of text and images on which AI models can be trained. Programs like Midjourney and ChatGPT would not be possible without this.

- The invention of the transformer. The transformer referred to here has nothing to do with the electrical transformer, such as that used to charge your phone from a high voltage power outlet. It is the name given to a type of neural network developed by Google, one that is very good at learning the relationships between words and sentences. Its design also made it easier to train on large amounts of data, among other benefits. This led to huge breakthroughs in natural language processing (NLP) which allowed for the development of AI models with advanced conversational abilities (like ChatGPT). It was also realized that images, video, audio, and the like can be treated as languages, allowing the transformer to learn the relationships between text and images, text and audio, video and audio, etc. This led to the development of text-to-image AI models (like DALL·E 2). In the same way that a transformer can translate between English and French, the transformer can translate between text and images, etc. Another benefit of this is that any development in one area (such as in image processing) automatically leads to benefits in other areas (such as audio processing). This is part of the reason there has been a huge increase in the rate of development.

References and notes

- Baheti, P. The Essential Guide to Neural Network Architectures, v7labs.com, 8 Jul 2021. Return to text.

- 3Blue1Brown, Neural networks, youtube.com. Return to text.

- The following New York Times article discusses a very similar watermarking method; Collins, K., How ChatGPT Could Embed a ‘Watermark’ in the Text It Generates, nytimes.com, 17 Feb 2023. Return to text.

- Kirchenbauer, J., and 5 others, A Watermark for Large Language Models, arxiv.org, 27 Jan 2023. Return to text.

- DeepMind, MuZero: Mastering Go, chess, shogi and Atari without rules, deepmind.com, 23 Dec 2020. Return to text.

- DeepMind, ref. 5. Return to text.

- DeepMind, MuZero’s first step from research into the real world, deepmind.com, 11 Feb 2022. Return to text.

- DeepMind, AlphaStar: Mastering the real-time strategy game StarCraft II, deepmind.com, 24 Jan 2019. Return to text.

- Open AI, OpenAI Five, openai.com. Return to text.

- Datagen, Neural Radiance Field (NeRF): A Gentle Introduction, datagen.tech. Return to text.

- See examples of NeRFs here: Two minute papers, NVIDIA’s New AI: Wow, Instant Neural Graphics!, youtube, 20 Feb 2022, https://www.youtube.com/watch?v=j8tMk-GE8hY. Return to text.

- Philips, C. New ways Maps is getting more immersive and sustainable, blog.google, 8 Feb 2023. Return to text.

Readers’ comments

Comments are automatically closed 14 days after publication.